Building real-time voice AI applications used to require deep expertise in audio processing, speech recognition, natural language understanding, and text-to-speech synthesis. Developers had to stitch together disparate APIs, manage complex state machines, and handle the intricacies of real-time streaming. Then came Pipecat—an open-source Python framework that is fundamentally changing how developers build voice AI agents.

What is Pipecat?

Pipecat is an open-source framework designed specifically for building voice and multimodal conversational AI applications. Created by Daily, the same team behind enterprise-grade video and audio infrastructure, Pipecat provides a clean abstraction layer that lets developers focus on conversation logic rather than plumbing.

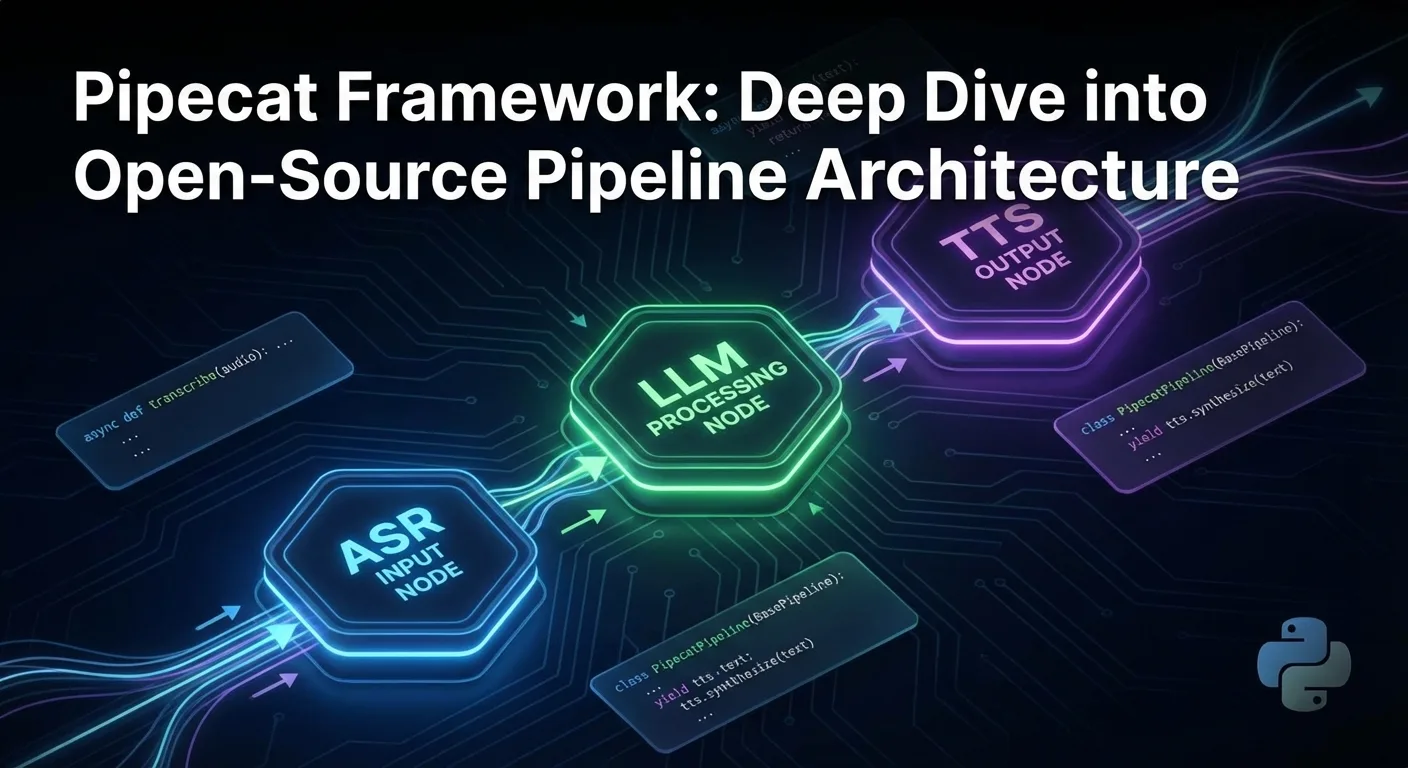

At its core, Pipecat treats voice AI as a pipeline of processors. Audio comes in, gets transcribed, processed by an LLM, converted back to speech, and sent out—all in real-time with minimal latency. This pipeline architecture makes the framework incredibly flexible and extensible.

The Pipeline Architecture Explained

Traditional voice AI development involves manually coordinating multiple services:

- Capturing and streaming audio from WebRTC

- Sending audio chunks to a speech-to-text service

- Collecting transcripts and forwarding to an LLM

- Streaming LLM responses to text-to-speech

- Playing synthesized audio back to the user

Pipecat abstracts this into a declarative pipeline where each component is a "processor" that transforms data flowing through the system. You define what services you want to use, configure them, and Pipecat handles the orchestration.

Key Pipeline Components

Transport Layer: Pipecat supports multiple transport mechanisms including WebRTC (via Daily), WebSocket, and local audio. This flexibility means you can deploy the same voice agent across web applications, phone systems, or embedded devices.

Speech-to-Text Processors: Choose from Deepgram, OpenAI Whisper, Azure Speech, Google Cloud Speech, or AssemblyAI. Switching providers is often a one-line configuration change.

LLM Processors: Integration with OpenAI GPT-4, Anthropic Claude, Google Gemini, and open-source models via Ollama. The framework handles streaming responses and context management automatically.

Text-to-Speech Processors: Support for ElevenLabs, Azure TTS, Google Cloud TTS, and Cartesia. Each provides different voice characteristics and latency profiles.

Why Latency Matters in Voice AI

Human conversation has a natural rhythm. Studies show that response delays beyond 300-400 milliseconds feel unnatural and disrupt conversational flow. This is why real-time performance is critical for voice AI.

Pipecat is engineered for low latency from the ground up:

- Streaming everywhere: Audio is streamed in chunks, not collected and sent in bulk

- Parallel processing: Pipeline stages can run concurrently where possible

- Intelligent interruption: The framework detects when users start speaking and can interrupt the AI response naturally

- Voice Activity Detection (VAD): Built-in VAD ensures the system knows when to listen and when to respond

At Demogod, we leverage these capabilities to create voice agents that respond in under 500ms—fast enough that conversations feel completely natural.

Building Your First Pipecat Agent

The elegance of Pipecat becomes clear when you see how little code is needed for a functional voice agent:

from pipecat.pipeline import Pipeline

from pipecat.transports.services.daily import DailyTransport

from pipecat.services.openai import OpenAILLMService

from pipecat.services.deepgram import DeepgramSTTService

from pipecat.services.elevenlabs import ElevenLabsTTSService

# Define your services

stt = DeepgramSTTService(api_key=DEEPGRAM_KEY)

llm = OpenAILLMService(api_key=OPENAI_KEY, model="gpt-4")

tts = ElevenLabsTTSService(api_key=ELEVENLABS_KEY)

# Create the pipeline

pipeline = Pipeline([

transport.input(),

stt,

llm,

tts,

transport.output()

])

# Run it

await pipeline.run()This simplicity masks powerful functionality. The framework handles audio chunking, transcription timing, LLM context windows, TTS streaming, and network transport—all automatically.

Advanced Features for Production

Function Calling and Tool Use

Modern voice agents need to take actions, not just converse. Pipecat integrates with LLM function calling to enable agents that can:

- Look up information in databases

- Book appointments or reservations

- Process transactions

- Control smart devices

- Navigate websites (like Demogod agents do)

Vision and Multimodal Capabilities

Pipecat supports vision processors, enabling agents that can see and describe what is on screen. This is crucial for applications like:

- Product demonstration agents that can reference visual elements

- Accessibility tools that describe interfaces

- Technical support agents that can see user screens

Context Management

Long conversations require careful context management. Pipecat provides utilities for:

- Summarizing conversation history

- Maintaining relevant context within token limits

- Injecting system prompts and personality

Pipecat vs. Building From Scratch

Why use a framework when you could wire up APIs directly? The answer lies in the hidden complexity of real-time systems:

| Challenge | DIY Approach | Pipecat |

|---|---|---|

| Audio streaming | Weeks of development | Built-in |

| Interruption handling | Complex state machine | Automatic |

| Provider switching | Major refactoring | Config change |

| Latency optimization | Ongoing engineering | Optimized by default |

| WebRTC integration | Months of work | One import |

Teams that try to build voice AI from scratch typically spend 3-6 months on infrastructure before they can focus on the actual agent experience. Pipecat collapses this to days.

The Open-Source Advantage

Being open-source (Apache 2.0 license), Pipecat offers significant benefits:

- No vendor lock-in: Deploy anywhere, modify anything

- Community contributions: New integrations and improvements from the ecosystem

- Transparency: Understand exactly how your voice AI works

- Cost control: No per-minute framework fees on top of API costs

The project has gained significant traction on GitHub, with contributions from companies building everything from customer service bots to therapeutic companions.

Real-World Applications

Pipecat powers diverse voice AI applications:

Customer Service: Voice agents that handle support calls with natural conversation, reducing wait times and improving satisfaction.

Healthcare: Patient intake systems, appointment scheduling, and symptom checking with HIPAA-compliant deployments.

Education: Language tutors, interactive learning assistants, and accessibility tools for students with disabilities.

E-commerce: Product recommendation agents that guide customers through catalogs with voice, exactly like traditional salespeople.

Product Demos: At Demogod, we use Pipecat-style pipelines to power voice agents that can walk users through any website, answering questions and demonstrating features in real-time.

Getting Started with Voice AI

If you are building voice AI applications, Pipecat dramatically lowers the barrier to entry. The framework is available on PyPI and extensively documented.

For those who want to see production voice AI in action without building from scratch, try Demogod—we have already done the hard work of creating DOM-aware voice agents that can guide users through any website.

The Future of Voice AI Development

As LLMs become faster and more capable, and as speech services achieve near-human quality, voice AI is transitioning from novelty to necessity. Frameworks like Pipecat are essential infrastructure for this transition, democratizing access to technology that was previously available only to well-funded teams.

The question is no longer whether voice AI will transform how we interact with software, but how quickly. With tools like Pipecat making development accessible, that transformation is accelerating faster than anyone predicted.

Ready to add voice AI to your product? Experience Demogod and see what is possible when voice agents truly understand your website.

DEMOGOD

DEMOGOD